LlamaChat

About LlamaChat

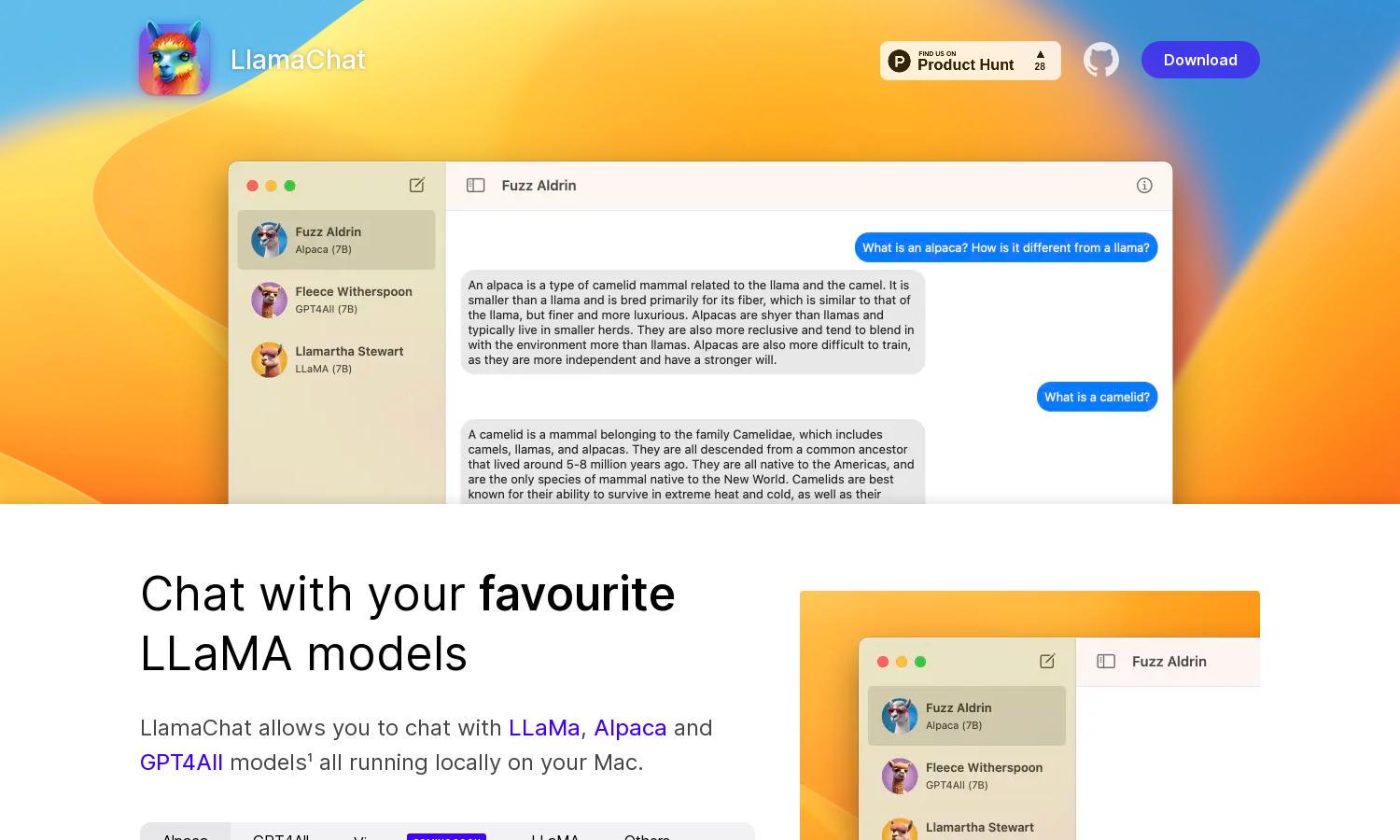

LlamaChat is an open-source macOS app that lets users chat with LLaMA-based models such as Alpaca and GPT4All directly on their Mac. Because the models run locally, conversations stay on the user's own machine. LlamaChat pairs a straightforward interface with the freedom of open-source software.

LlamaChat is free. As a fully open-source project, it requires no subscription or fees. Users download the application and add the model files they want to use, so they can explore AI models without any cost for the app itself.

LlamaChat has a clean, intuitive interface designed for easy navigation, so users can focus on chatting with LLaMA models rather than on configuring them.

How LlamaChat works

To use LlamaChat, users download the application to their Mac and choose the model they want to use, such as Alpaca or GPT4All. After onboarding, they import the model files and can start chatting with the model locally.

Key Features of LlamaChat

Chat with LLaMA Models

LlamaChat's main feature is chatting with LLaMA-based models, including Alpaca and GPT4All, entirely on your Mac. Because the model runs locally, chatting does not require internet access once the model is installed, and conversations are not sent to a remote server.

Open-source Integration

LlamaChat can import either raw PyTorch model checkpoints or pre-converted .ggml model files, so users can start from whichever format they already have. Combined with LlamaChat's open-source codebase, this gives users flexibility in which models they run.

Local Model Hosting

LlamaChat hosts models locally, running LLaMA models directly on the user's Mac. This gives users control over their setup and lets them chat without relying on cloud services or an internet connection.
