Agenta

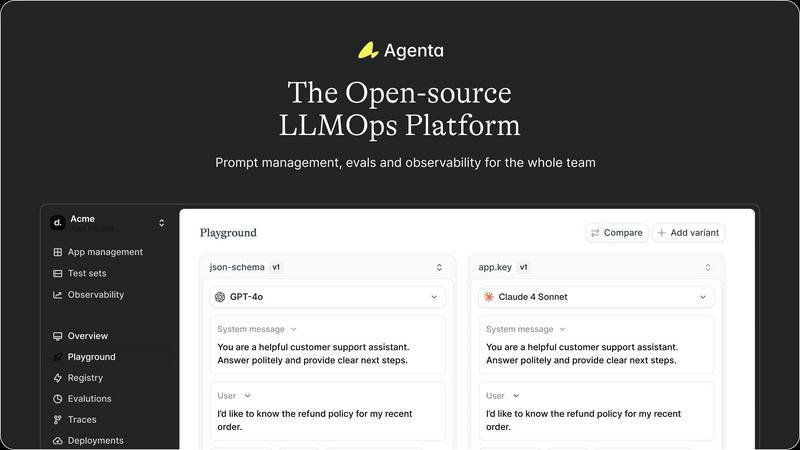

Agenta is the open-source platform for teams to build and manage reliable LLM applications together.

About Agenta

Agenta is an open-source LLMOps platform designed to bring order to LLM application development. It acts as a central workspace for AI teams, bridging the gap between rapid experimentation and reliable production deployment. The platform is built for collaborative teams of developers, product managers, and subject matter experts who are tired of prompts scattered across Slack, siloed workflows, and "vibe testing" changes before shipping. Agenta's core value proposition is replacing that unpredictability with a structured, evidence-based process. It provides integrated tooling for LLMOps best practices, so teams can systematically experiment with prompts and models, run automated and human evaluations, and observe production systems in depth. By offering a single source of truth for the entire LLM lifecycle, Agenta helps organizations build, evaluate, debug, and ship AI applications with confidence, moving from guesswork to governance.

Features of Agenta

Unified Experimentation Playground

Agenta provides a model-agnostic playground where teams can compare different prompts, parameters, and foundation models side by side in real time. This central hub eliminates the need for scattered scripts and documents, allowing for rapid iteration. Every change is automatically versioned, creating a complete audit trail. Any problematic trace from production can be saved as a test case and loaded into the playground for debugging, which closes the feedback loop between operations and development.

Automated and Flexible Evaluation Framework

The platform replaces subjective guesswork with a systematic evaluation process. Teams can define test sets and leverage a variety of evaluators, including LLM-as-a-judge setups, built-in metrics, or custom code. Evaluations can assess not just final outputs but the entire reasoning trace of complex agents, pinpointing failures in intermediate steps. This framework also integrates human evaluation, allowing domain experts to provide feedback directly within the workflow, ensuring both automated and qualitative metrics guide development.
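The evaluation process described above can be illustrated with a minimal, library-independent sketch. It does not use Agenta's SDK; `TestCase`, `run_evaluation`, `fake_app`, and both evaluator functions are hypothetical names chosen for illustration. It shows the general idea of scoring an application against a fixed test set with several custom code evaluators.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TestCase:
    """One row of a test set (hypothetical structure)."""
    prompt: str
    expected: str


# Assumed evaluator signature: (output, expected) -> score between 0 and 1.
Evaluator = Callable[[str, str], float]


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 only when output equals expected, ignoring case and padding."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def contains_expected(output: str, expected: str) -> float:
    """Score 1.0 when the expected answer appears anywhere in the output."""
    return 1.0 if expected.lower() in output.lower() else 0.0


def run_evaluation(
    app: Callable[[str], str],
    test_set: List[TestCase],
    evaluators: Dict[str, Evaluator],
) -> Dict[str, float]:
    """Run the app on every test case and return the mean score per evaluator."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    totals = {name: 0.0 for name in evaluators}
    for case in test_set:
        output = app(case.prompt)
        for name, evaluator in evaluators.items():
            totals[name] += evaluator(output, case.expected)
    return {name: total / len(test_set) for name, total in totals.items()}


def fake_app(prompt: str) -> str:
    """Stand-in for an LLM application so the sketch runs without a model."""
    answers = {"Capital of France?": "Paris", "2 + 2?": "The answer is 4"}
    return answers.get(prompt, "")


test_set = [TestCase("Capital of France?", "Paris"), TestCase("2 + 2?", "4")]
scores = run_evaluation(
    fake_app,
    test_set,
    {"exact_match": exact_match, "contains_expected": contains_expected},
)
print(scores)
```

In a real setup, `fake_app` would be replaced by a call to the application under test, and an LLM-as-a-judge evaluator could be plugged in as another function with the same assumed signature.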

Production Observability and Tracing

Agenta offers comprehensive observability by tracing every LLM request end-to-end. Teams can drill down into these traces to find the exact point of failure in a complex chain or agentic workflow. Any trace can be annotated by the team or by end-users for feedback and, crucially, turned into a test case with a single click. This capability is bolstered by live monitoring that can run online evaluations on production traffic to detect performance regressions immediately.

Collaborative Workflow for Cross-Functional Teams

Designed for the entire AI product team, Agenta breaks down silos. It provides a safe, user-friendly UI for domain experts and product managers to edit prompts, run experiments, and compare results without writing code. Simultaneously, it offers full API parity for developers, enabling programmatic management that integrates seamlessly with existing CI/CD pipelines and tools like LangChain or LlamaIndex. This creates a unified hub where business logic and technical implementation converge.

Use Cases of Agenta

Enterprise AI Product Development

Large organizations developing customer-facing LLM applications (like chatbots, copilots, or content generators) use Agenta to establish a governed, collaborative development process. It enables product managers to define evaluation criteria, subject matter experts to refine prompts for accuracy, and engineers to manage deployment, all while maintaining version control and auditability to meet compliance and reliability standards.

AI Startup Prototyping and Scaling

Startups moving fast from prototype to product leverage Agenta to maintain velocity without sacrificing quality. The platform allows small teams to quickly test hypotheses with different models and prompts, validate improvements with automated evaluations before deployment, and immediately debug issues using production traces, ensuring they ship reliable features and iterate based on evidence, not intuition.

Complex Agentic System Debugging

Teams building sophisticated multi-step AI agents face immense debugging challenges. Agenta's trace-level observability is critical here, allowing developers to visualize the entire reasoning chain, identify which tool call or step failed, and isolate the problem. The ability to save failing traces as tests ensures these edge cases are permanently addressed in the evaluation suite.
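As a rough illustration of the debugging flow described above, the sketch below locates the first failed step in a trace and turns the trace into a test case. It is not Agenta's data model or API; `Span`, `Trace`, `first_failed_span`, and `trace_to_test_case` are hypothetical names, and the trace content is invented sample data.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Span:
    """One step of an agent run, such as a tool call (hypothetical structure)."""
    name: str
    output: Optional[str] = None
    error: Optional[str] = None


@dataclass
class Trace:
    """A full request: the user input plus the ordered steps taken."""
    trace_id: str
    user_input: str
    spans: List[Span] = field(default_factory=list)


def first_failed_span(trace: Trace) -> Optional[Span]:
    """Return the earliest span that recorded an error, or None if all passed."""
    for span in trace.spans:
        if span.error is not None:
            return span
    return None


def trace_to_test_case(trace: Trace) -> Dict[str, Optional[str]]:
    """Keep the input and failure details so the case can be replayed later."""
    failed = first_failed_span(trace)
    return {
        "source_trace": trace.trace_id,
        "input": trace.user_input,
        "failed_step": failed.name if failed else None,
        "error": failed.error if failed else None,
    }


# Invented sample trace: the second step (a tool call) timed out.
trace = Trace(
    trace_id="trace-001",
    user_input="Find the cheapest flight to Lisbon",
    spans=[
        Span(name="plan", output="search flights, then rank by price"),
        Span(name="search_tool", error="timeout after 30s"),
        Span(name="answer", output="Sorry, something went wrong."),
    ],
)

test_case = trace_to_test_case(trace)
print(test_case)
```

Appending such test cases to the evaluation suite means the same input is re-run on every future change, which is the mechanism by which a one-off production failure becomes a permanent regression check.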

Regulatory and Compliance-Driven AI Projects

In industries like finance, healthcare, or legal tech, where AI outputs must be accurate and auditable, Agenta provides the necessary framework. It documents every prompt version, evaluation result, and production decision. This creates an immutable record for audits, demonstrates due diligence in model validation, and ensures that any change to the system is systematically tested and approved.

Frequently Asked Questions

Is Agenta truly open-source?

Yes, Agenta is a fully open-source platform. The core codebase is publicly available on GitHub, allowing users to inspect, modify, and deploy the software independently. This model ensures transparency, prevents vendor lock-in, and enables a community of contributors to improve the tool. The company supports this community with resources, a public roadmap, and a dedicated Slack channel for collaboration.

How does Agenta integrate with existing AI stacks?

Agenta is designed for seamless integration. It is model-agnostic, working with APIs from OpenAI, Anthropic, Google, open-source models, and others. It offers SDKs and APIs that integrate smoothly with popular frameworks like LangChain and LlamaIndex, allowing developers to incorporate Agenta's experiment tracking, evaluation, and observability features into their existing applications with minimal code changes.

Can non-technical team members really use Agenta effectively?

Absolutely. A key design principle of Agenta is democratizing LLM development. The platform includes an intuitive web UI that allows product managers, domain experts, and other non-coders to safely experiment with prompts in a playground, configure and view evaluation results, and provide feedback on production traces. This empowers the whole team to contribute their expertise directly to the AI's development.

What is the difference between offline and online (live) evaluations?

Offline evaluations are run on static test datasets to validate changes before deployment. Online (or live) evaluations continuously monitor real production traffic, applying lightweight evaluation checks to detect regressions in real time. This lets teams catch issues that only appear under real-world usage patterns as soon as they occur, not just during pre-release testing.
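To make the online case concrete, here is a minimal sketch of a rolling check over production outputs. It is an illustration under stated assumptions, not Agenta's implementation: `OnlineMonitor`, `non_empty_and_short`, the window size, and the pass-rate threshold are all hypothetical.

```python
from collections import deque
from typing import Callable, Deque


class OnlineMonitor:
    """Tracks a rolling pass rate over the most recent production outputs."""

    def __init__(
        self,
        check: Callable[[str], bool],
        window: int = 5,
        min_pass_rate: float = 0.8,
    ) -> None:
        self.check = check
        self.window = window
        self.min_pass_rate = min_pass_rate
        # deque with maxlen drops the oldest result once the window is full.
        self.results: Deque[bool] = deque(maxlen=window)

    def observe(self, output: str) -> bool:
        """Record one output; return True when a regression is flagged."""
        self.results.append(self.check(output))
        window_full = len(self.results) == self.window
        pass_rate = sum(self.results) / len(self.results)
        # Only alert on a full window to avoid noise from the first few requests.
        return window_full and pass_rate < self.min_pass_rate


def non_empty_and_short(output: str) -> bool:
    """A lightweight check: the answer is non-empty and at most 200 characters."""
    return 0 < len(output.strip()) <= 200


monitor = OnlineMonitor(non_empty_and_short, window=5, min_pass_rate=0.8)

# Invented stream of production outputs; empty strings simulate failures.
production_outputs = ["ok", "fine", "", "good", "", "yes"]
alerts = [monitor.observe(output) for output in production_outputs]
print(alerts)
```

The check here is deliberately cheap because it runs on live traffic; heavier evaluators, such as an LLM judge, are usually better suited to offline runs or to a sampled subset of requests.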
